As with, I’m sure, many people, Mandrill’s recent changes found me looking to jump ship. Oh well, it was great while it lasted. Since we already use a number of AWS services, it made a sort of eggs-all-in-one-basket sense to switch to SES as our replacement.

Mandrill to SES, you say. That’s no problem, just switching from one API to another. Amazon even wrote a post on it.

But here’s the thing: we have a slew of servers sending emails generated from templates, and I wanted those templates all stored in one spot. You know, for easier management. We’ll call it the Great Template Unification, or GTU for short.

You don’t have to call it that.

A certain amount of checkability was also important — so we could verify if and when an email was sent, and that it had gone through SES successfully.

What follows is a basic description of the email system I set up, for, you know, moving to SES. It involves SES, S3, and Lambda, and it’s probably overkill. But hey, that’s what computer science is all about, right?

Three Amazon services, that must be expensive! Turns out, since we don’t send all that many emails, it’s mostly just S3 costs (which should be quite low).

- The first million Lambda requests per month are free. We don’t get anywhere close to that with emails, although we do run a few other lambdas. Assuming we’re already over a million, it still shouldn’t add up to much.

- With SES, 62,000 emails per month are free. We average less than 500.

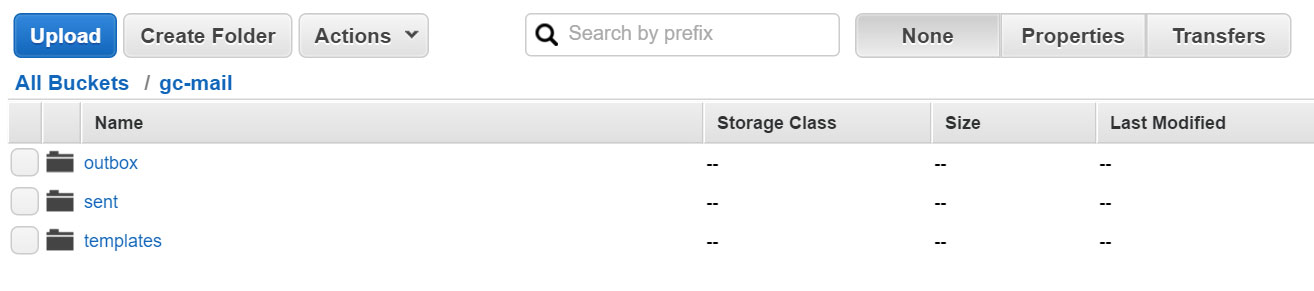

The S3 bucket convention

An email system deserves its own bucket, at least IMHO — s3://gc-mail, for example.

There are three “folders” (or prefixes, if you insist): outbox, sent, and templates.

Outbox is where emails waiting to be sent are stored. To send an email, we’ll just upload a JSON file here. How will the actual sending occur, you ask? We’ll set up a lambda to take care of that. I added a lifecycle rule to remove files from this directory after 365 days — because, you know, why not?

Once an email is successfully sent via SES, the JSON file will be moved to the sent directory, where I’ve set up a lifecycle rule to remove files older than 7 days.

Templates is where the template HTML files are held. For our purposes these are just HTML files copy and pasted from our Mandrill templates.
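The two lifecycle rules above could be written down roughly like this. It's a minimal sketch in Python, assuming boto3 is what applies it; the rule IDs are made up for illustration, and only the prefixes and day counts come from the setup described here.

```python
def lifecycle_rules():
    """Build the two expiration rules: outbox after 365 days, sent after 7."""
    return {
        "Rules": [
            {
                "ID": "expire-outbox",  # hypothetical rule name
                "Filter": {"Prefix": "outbox/"},
                "Status": "Enabled",
                "Expiration": {"Days": 365},
            },
            {
                "ID": "expire-sent",  # hypothetical rule name
                "Filter": {"Prefix": "sent/"},
                "Status": "Enabled",
                "Expiration": {"Days": 7},
            },
        ]
    }


# Applying it needs boto3 and credentials, so it is left commented out:
# import boto3
# boto3.client("s3").put_bucket_lifecycle_configuration(
#     Bucket="gc-mail", LifecycleConfiguration=lifecycle_rules())
```

The templates prefix gets no rule, since those files should stick around.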

Sending the emails

Wait, but what’s this JSON file I upload? In our case, it’s just the JSON-encoded version of the object we’d otherwise send to the AWS SES SDK, with a few extra fields to let us know which template to use, and what data to insert. There’s some flexibility built in, but that’s the gist of it. Here’s an example:

{

"Destination": {

"ToAddresses": [

"you@yourdomain.com",

]

},

"Template": "gc-notification",

"Message": {

"Body": {

"Html": {

"Data": {

"server": "gigglingcorpse.com",

"report": "Hey man, just checking in. Hit me back if you want."

}

}

},

"Subject": {

"Data": "Gigglingcorpse test email"

}

},

"Source": "internal@gigglingcorpse.com"

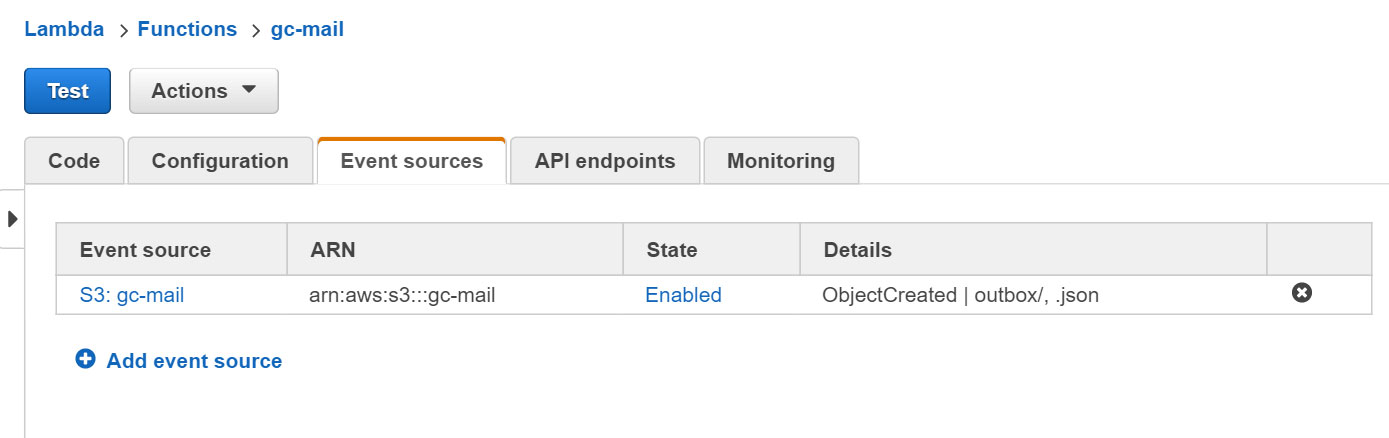

}I have a lambda set up to listen for PUTs on the bucket with the prefix outbox/ and the suffix .json.
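For the curious, pulling the uploaded file's location out of the S3 event might look something like the sketch below. It's written in Python as an assumption, and `outbox_keys` is a name invented for this example. The bucket notification already filters on prefix and suffix, so the check here is just a second line of defence.

```python
from urllib.parse import unquote_plus


def outbox_keys(event, prefix="outbox/", suffix=".json"):
    """Return (bucket, key) pairs for outbox JSON files in an S3 event."""
    found = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # S3 URL-encodes object keys in event payloads (spaces arrive as "+").
        key = unquote_plus(record["s3"]["object"]["key"])
        if key.startswith(prefix) and key.endswith(suffix):
            found.append((bucket, key))
    return found
```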

It parses the uploaded file, downloads the appropriate template, inserts the values, and passes it along to SES. If for some reason it can’t send the email, it sends me the error using the same system. I can then check that file in S3 to see what happened.
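The "inserts the values" step can be sketched as below. This is a guess at the shape of it, not the actual lambda: it's in Python, it assumes the templates mark their slots as `{{name}}`, and it HTML-escapes the values, none of which is spelled out above. `render` and `build_ses_params` are hypothetical names.

```python
import copy
import html
import re

# Assumed placeholder syntax: {{name}}, with optional spaces inside the braces.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template_html, data):
    """Replace each {{name}} with its escaped value; leave unknown names alone."""
    def substitute(match):
        name = match.group(1)
        if name not in data:
            return match.group(0)
        return html.escape(str(data[name]))
    return PLACEHOLDER.sub(substitute, template_html)


def build_ses_params(message, template_html):
    """Turn an outbox message into the object SES expects."""
    params = copy.deepcopy(message)  # don't mutate the caller's copy
    params.pop("Template", None)     # our extra field, not an SES parameter
    html_part = params["Message"]["Body"]["Html"]
    html_part["Data"] = render(template_html, html_part["Data"])
    return params
```

What comes out has only `Destination`, `Message`, and `Source` left, which is the shape boto3's `send_email` takes as keyword arguments.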

Once the email has been sent, the lambda copies the file to the sent directory and deletes the original. That’s S3’s version of moving a file, as I’m sure you know.
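The copy-then-delete dance is short enough to sketch. This assumes a boto3-style S3 client is passed in as `s3`, and `move_to_sent` is a name made up for the example; the real lambda may well do it differently.

```python
def move_to_sent(s3, bucket, key, outbox="outbox/", sent="sent/"):
    """'Move' an object from outbox/ to sent/ by copying, then deleting."""
    if not key.startswith(outbox):
        raise ValueError("expected a key under " + outbox)
    new_key = sent + key[len(outbox):]
    # Copy first, so a failure part-way through never loses the message.
    s3.copy_object(
        Bucket=bucket,
        Key=new_key,
        CopySource={"Bucket": bucket, "Key": key},
    )
    s3.delete_object(Bucket=bucket, Key=key)
    return new_key
```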

Oh, speaking of the Lambda, it needs permission to PUT, GET, and DELETE objects in the bucket, as well as permission to send email through SES.

Client library

To call it from our various servers, I wrote a PHP client library. It has some helper functions, but the most important one is called send(). Here’s an example of it in use:

    <?php
    $mailer = new GCMail($s3client);
    $mailer->send(array(
        'To' => 'you@yourdomain.com',
        'From' => 'internal@gigglingcorpse.com',
        'Template' => 'gc-notification',
        'Data' => array(
            'server' => 'gigglingcorpse.com',
            'report' => 'This is coming from PHP'
        ),
        'Subject' => 'TEST FROM PHP'
    ));
    ?>

So now I can send templated emails fairly easily. But guys, this is AWS. You know permissions are going to be an issue.

Luckily, most of our machines are assigned roles, so we don’t have to worry about passing around or storing credentials; that all happens by way of witchcraft. All that’s left to do is make sure those roles have permission to PUT to our S3 bucket. This can be handled by adding a statement like this to their policies:

    {
        "Effect": "Allow",
        "Action": [
            "s3:PutObject"
        ],
        "Resource": [
            "arn:aws:s3:::gc-mail/*"
        ]
    }

The main point of irrecoverable failure in this system is getting the JSON files to S3. If the SDK fails to connect, which happens occasionally, the email won’t be sent. To account for this, I’d like to save the JSON files to a temporary directory when the client encounters an error, and try again later.

But, you know, maybe later.
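For whenever later arrives, here's roughly what that save-and-retry idea could look like. It's only a sketch, written in Python rather than the PHP of the client library, and everything in it is hypothetical: `upload` stands in for whatever call puts the JSON into the outbox, and `spool_dir` is the temporary directory.

```python
import json
import os
import uuid


def send_or_spool(upload, message, spool_dir):
    """Try to upload; on any failure, park the JSON on disk for later."""
    body = json.dumps(message)
    try:
        upload(body)
        return True
    except Exception:
        os.makedirs(spool_dir, exist_ok=True)
        path = os.path.join(spool_dir, uuid.uuid4().hex + ".json")
        with open(path, "w", encoding="utf-8") as handle:
            handle.write(body)
        return False


def retry_spooled(upload, spool_dir):
    """Re-attempt every parked file; delete the ones that go through."""
    if not os.path.isdir(spool_dir):
        return 0
    delivered = 0
    for name in sorted(os.listdir(spool_dir)):
        if not name.endswith(".json"):
            continue
        path = os.path.join(spool_dir, name)
        with open(path, encoding="utf-8") as handle:
            body = handle.read()
        try:
            upload(body)
        except Exception:
            continue  # still failing; leave it for the next run
        os.remove(path)
        delivered += 1
    return delivered
```

Something like a cron job calling `retry_spooled` every few minutes would cover the "try again later" part.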